There is an old axiom in computer science that, with the arrival of Large Language Models (LLMs), matters more than ever: garbage in, garbage out.

When implementing Retrieval-Augmented Generation (RAG) systems, the architecture that connects an AI model to a company’s internal documentation, we often run into a frustrating problem: the AI responds with disjointed sentences, cites contradictory data, or simply ignores information we know exists.

The usual reaction is to question the model’s intelligence. The technical reality, however, is often different: the model has ingested a document full of structural noise and has lost the ability to discern the relevant signal.

Imagine handing a financial analyst a 100-page report where paragraphs are scrambled, watermarks obscure key figures and irrelevant footnotes interrupt the main sentences. The analyst will inevitably fail. The exact same thing happens to AI.

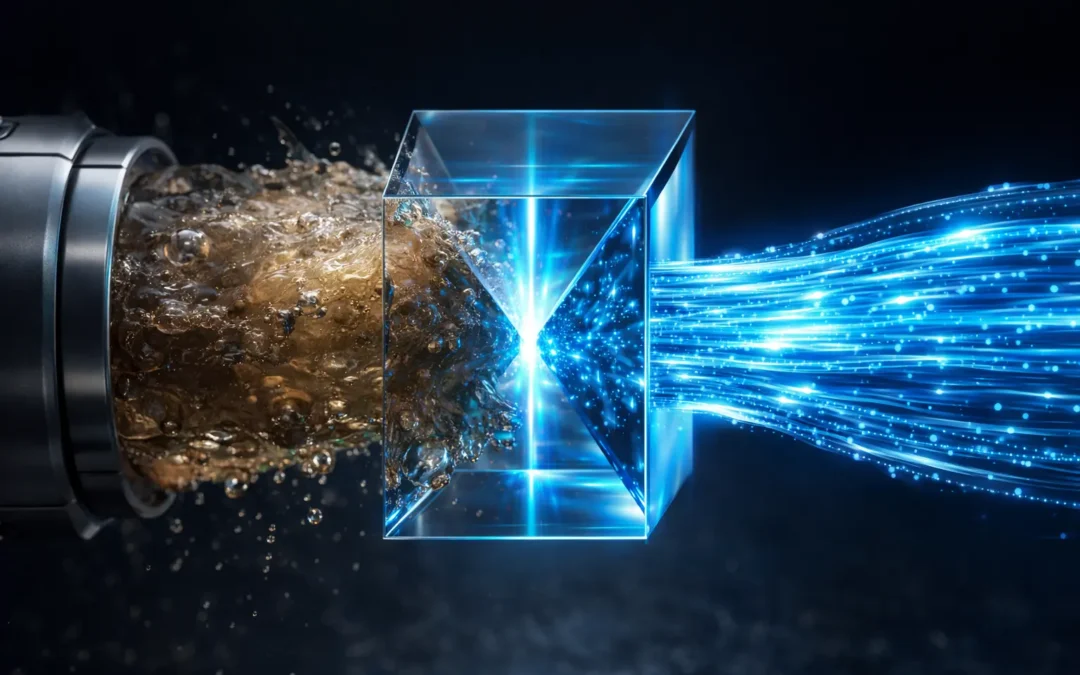

The fundamental paradigm shift is speed. Cleaning data once a month in a Data Warehouse with batch processes is no longer viable, because AI consumes information in real time. The architecture therefore needs a data treatment plant that operates the instant information travels toward the model, purifying it before it is indexed.
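A minimal Python sketch of this idea, under stated assumptions: documents are handled one at a time as they arrive (a generator rather than a nightly batch job), each one passes through a chain of cleaning functions, and only non-empty results continue toward indexing. `clean_stream` and the two toy stages are hypothetical names; in a real system the stages would be the protocols described below, and the consumer would be an embedding and indexing step.

```python
from typing import Callable, Iterable, Iterator

# A cleaning stage takes raw text and returns cleaned text.
Stage = Callable[[str], str]


def clean_stream(documents: Iterable[str], stages: list[Stage]) -> Iterator[str]:
    """Apply every stage to each document as it arrives, before indexing."""
    for doc in documents:
        for stage in stages:
            doc = stage(doc)
        if doc.strip():  # drop documents that end up empty after cleaning
            yield doc


# Hypothetical toy stages, standing in for the real cleaning protocols.
def drop_confidential_marker(text: str) -> str:
    return text.replace("CONFIDENTIAL", "")


def collapse_whitespace(text: str) -> str:
    return " ".join(text.split())


if __name__ == "__main__":
    incoming = iter(["CONFIDENTIAL  Q3 revenue   rose.", "CONFIDENTIAL"])
    for cleaned in clean_stream(incoming, [drop_confidential_marker, collapse_whitespace]):
        print(cleaned)  # stand-in for sending the text to embedding/indexing
```

Because `clean_stream` is a generator, each document is cleaned at the moment it is pulled toward the index, which is the "on-the-fly" property the architecture calls for.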

On-the-Fly Cleaning Protocols: Pre-processing Architecture

To protect the integrity and accuracy of responses in a corporate environment, a robust data architecture should implement these four filtering protocols before vectorization (the conversion of text into embeddings):

- Visual Noise and Structure Sanitation

Corporate documents (especially PDFs and emails) are saturated with elements that help human readers but are toxic for AI: repetitive headers, page numbers, watermarks and the legal disclaimers at the bottom of every email.

- The Technical Problem: If a model reads “Confidential – Page 12” in the middle of a logical sentence, semantic continuity breaks. The model treats that text as part of the content, which degrades response quality.

- The Strategy: Implement cleaning scripts based on regular expressions (regex) and layout analysis. The system must identify these repetitive patterns and surgically prune them before the text is processed. Eliminating this noise can considerably improve how well the model understands the context.
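The regex-plus-repetition idea can be sketched in a few lines of Python. This is a simplified, text-only stand-in for layout analysis: it assumes the document has already been split into pages, treats any line that recurs on a large share of pages as a header or footer, and uses a hypothetical `PAGE_MARKER` pattern for “Confidential – Page 12”-style markers. Real layout analysis would also use position information from a PDF parser, which this sketch does not.

```python
import re
from collections import Counter

# Assumed pattern for "Page 12", "Page 3 of 10" or "Confidential – Page 12"; adjust per corpus.
PAGE_MARKER = re.compile(
    r"^\s*(confidential\s*[-–]\s*)?page\s+\d+(\s+of\s+\d+)?\s*$", re.IGNORECASE
)


def strip_repeated_lines(pages: list[str], min_ratio: float = 0.6) -> list[str]:
    """Remove page-number markers, blank lines, and lines that appear on at least
    `min_ratio` of the pages (and on at least two pages)."""
    # Count each distinct line once per page.
    counts = Counter()
    for page in pages:
        counts.update({line.strip() for line in page.splitlines() if line.strip()})
    threshold = max(2, int(min_ratio * len(pages)))
    boilerplate = {line for line, n in counts.items() if n >= threshold}

    cleaned = []
    for page in pages:
        kept = [
            line
            for line in page.splitlines()
            if line.strip()
            and line.strip() not in boilerplate
            and not PAGE_MARKER.match(line)
        ]
        cleaned.append("\n".join(kept))
    return cleaned


if __name__ == "__main__":
    pages = [
        "ACME Corp Annual Report\nRevenue grew in the north.\nConfidential – Page 1",
        "ACME Corp Annual Report\nCosts were stable.\nConfidential – Page 2",
    ]
    print(strip_repeated_lines(pages))
```

The repetition heuristic has an obvious failure mode: a legitimate sentence that really does appear on most pages is dropped too, so `min_ratio` deserves tuning for each document collection.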

- Semantic Fragmentation (“Chunking” Strategies)

AI models have a limited context window; they cannot read an entire library at once. Information must therefore be divided into processable fragments, or chunks. The most common error in failed projects is cutting text arbitrarily every 1,000 characters.

- The Technical Problem: A blind, fixed-length cut can split a vital sentence in half or separate a question from its answer, leaving the model without the context it needs to reason.

- The Strategy: Use semantic segmentation. Instead of counting characters, the algorithm should identify linguistic structures: sentence boundaries, paragraph breaks or thematic sections. In addition, apply a sliding window (overlap), in which the end of one fragment is repeated at the beginning of the next, so that no idea is lost at the cut.
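A minimal sketch of sentence-aware chunking with overlap, assuming a naive regex sentence splitter (a production system would more likely use an NLP library’s sentence segmenter or topic-boundary detection). `semantic_chunks`, `max_chars` and `overlap_sentences` are illustrative names and defaults, not a reference implementation: chunks are built from whole sentences only, and the last sentence(s) of each chunk are repeated at the start of the next.

```python
import re


def semantic_chunks(text: str, max_chars: int = 1000, overlap_sentences: int = 1) -> list[str]:
    """Group whole sentences into chunks of roughly max_chars characters,
    repeating the last `overlap_sentences` sentences of each chunk at the
    start of the next one (sliding-window overlap)."""
    # Naive split: after ., ! or ? followed by whitespace, or at a blank line (paragraph break).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+|\n\s*\n", text) if s.strip()]
    chunks: list[str] = []
    current: list[str] = []
    for sentence in sentences:
        candidate = " ".join(current + [sentence])
        if current and len(candidate) > max_chars:
            chunks.append(" ".join(current))
            # Carry the tail of the finished chunk into the next one.
            current = current[-overlap_sentences:] if overlap_sentences > 0 else []
        current.append(sentence)
    if current:
        chunks.append(" ".join(current))
    return chunks


if __name__ == "__main__":
    for chunk in semantic_chunks("Alpha one. Beta two. Gamma three.", max_chars=22):
        print(chunk)
```

With `max_chars=22`, the example yields `"Alpha one. Beta two."` and `"Beta two. Gamma three."`: no sentence is split, and the shared sentence gives the second chunk its context. A single sentence longer than `max_chars` still becomes its own (oversized) chunk rather than being cut.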

- Metadata Enrichment for Hybrid Search

Sometimes data is clean but ambiguous because it lacks context. If the AI ingests a fragment stating “Revenue rose by 5%” but doesn’t know which year, department or region it refers to, that information is practically useless.

- The Technical Problem: Vector search (semantic similarity) alone can retrieve similar but irrelevant data (e.g., revenue data from 2019 when asked about 2023).

- The Strategy: Inject metadata during ingestion. Before each fragment is stored, a tag with key attributes, invisible to the reader but available to the search engine, is attached to it: [Year: 2023, Dept: Sales, Type: Quarterly Report]. This enables Hybrid Search: results are first filtered by exact metadata, and similarity search then runs only within the matching content, which sharply improves precision.
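The filter-then-rank step can be sketched in plain Python. Everything here is an illustrative assumption: `Chunk` and `filtered_search` are hypothetical names, and the two-dimensional embeddings are toy vectors rather than output from a real embedding model. A production system would normally rely on the metadata-filtering feature of its vector database instead of a list scan.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    embedding: list[float]
    metadata: dict[str, object] = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(chunks: list[Chunk], query_vec: list[float],
                    filters: dict[str, object], top_k: int = 3) -> list[Chunk]:
    """Step 1: keep only chunks whose metadata matches every filter exactly.
    Step 2: rank the survivors by cosine similarity to the query embedding."""
    candidates = [c for c in chunks
                  if all(c.metadata.get(k) == v for k, v in filters.items())]
    candidates.sort(key=lambda c: cosine(c.embedding, query_vec), reverse=True)
    return candidates[:top_k]


if __name__ == "__main__":
    store = [
        Chunk("Revenue rose by 5%", [0.9, 0.1], {"year": 2019, "dept": "Sales"}),
        Chunk("Revenue rose by 5%", [0.9, 0.1], {"year": 2023, "dept": "Sales"}),
        Chunk("Headcount was flat", [0.1, 0.9], {"year": 2023, "dept": "HR"}),
    ]
    for hit in filtered_search(store, [1.0, 0.0], {"year": 2023}):
        print(hit.metadata, hit.text)
```

Both “Revenue rose by 5%” chunks are equally similar to the query, so similarity alone cannot tell them apart; the `year` filter is what excludes the 2019 fragment before ranking.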

- Entity Normalization and Temporal References

Natural language is imprecise. Documents are full of relative references like “last month”, “yesterday” or “this quarter”. If the AI reads such a document a year later, it will misinterpret the information.

- The Technical Problem: Temporal debt in data. A 2022 document stating that “prospects for next year are good” will mislead anyone who consults it in 2024.

- The Strategy: Real-time entity resolution. During processing, the system must detect relative temporal references and convert them into absolute ones based on the document’s creation date (for a document created in November 2023, “last month” becomes “October 2023”). Likewise, acronyms and entity names must be standardized to avoid conceptual duplication.
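A minimal sketch of both normalizations, assuming the document’s creation date is known at ingestion time. It handles only a handful of English phrases with regular expressions; a production pipeline would more likely use a dedicated date-parsing or named-entity library. `ACRONYMS` is a hypothetical glossary, and the function names are illustrative.

```python
import re
from datetime import date, timedelta

MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]

# Hypothetical glossary; in practice this would come from a maintained company source.
ACRONYMS = {"HR": "Human Resources", "CFO": "Chief Financial Officer"}


def resolve_relative_dates(text: str, created: date) -> str:
    """Rewrite a few relative time expressions as absolute ones, anchored on the
    document's creation date rather than on the date it is read."""
    prev_month = created.replace(day=1) - timedelta(days=1)  # last day of previous month
    quarter = (created.month - 1) // 3 + 1
    replacements = {
        "yesterday": (created - timedelta(days=1)).isoformat(),
        "last month": f"{MONTHS[prev_month.month - 1]} {prev_month.year}",
        "this quarter": f"Q{quarter} {created.year}",
        "next year": str(created.year + 1),
        "last year": str(created.year - 1),
    }
    for phrase, absolute in replacements.items():
        text = re.sub(rf"\b{phrase}\b", absolute, text, flags=re.IGNORECASE)
    return text


def expand_acronyms(text: str) -> str:
    """Replace each known acronym (whole words only) with its canonical full name."""
    for short, full in ACRONYMS.items():
        text = re.sub(rf"\b{re.escape(short)}\b", full, text)
    return text


if __name__ == "__main__":
    doc = "Revenue for last month was flat; prospects for next year are good."
    print(resolve_relative_dates(doc, date(2023, 11, 15)))
```

For a document created on 15 November 2023, the example prints “Revenue for October 2023 was flat; prospects for 2024 are good.”, which stays correct no matter when it is read. Phrases the sketch does not know (“two weeks ago”, “FY24”) pass through unchanged.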

Data Hygiene as a Competitive Advantage

The battle of generative AI isn’t won by acquiring the model with the most parameters but by building the most rigorous filtering system.

A corporate AI architecture is comparable to a high-precision industrial process: the quality of the final product (the business decision) depends directly on the purity of the raw material (the data). Investing in real-time data hygiene is not an operating cost; it is the only guarantee that the investment in AI will deliver returns instead of risks.

Does your corporate assistant offer inconsistent answers despite having access to all documentation? The problem is rarely the AI; it is usually the data diet it consumes. At IntechHeritage, we audit and design the data pipelines that guarantee reliable answers.